Production-like Kubernetes on Raspberry Pi: Setup

In this series I want to build a production-grade Kubernetes cluster that is useful at least in a home environment, and possibly also in small business environments.

The Plan

- Part 1: Set up a 3-node k3s cluster with 2 master nodes and 1 worker node (more worker nodes can be added later)

- Part 2: Deploy MetalLB (load balancer) to route traffic into the cluster.

- Part 3: Deploy Traefik as an ingress controller to reach services by DNS name.

- Part 4: Deploy cert-manager for automatic creation of Let’s Encrypt certificates.

- Part 5: Deploy Longhorn for persistent storage (an alternative is an NFS server, as described here).

- Part 6: Cluster backup with Velero.

- Part 7: Metrics with Prometheus and logging with Loki.

- Part 8: Use Flux CD to store the cluster description in Git so the cluster can be rebuilt from it.

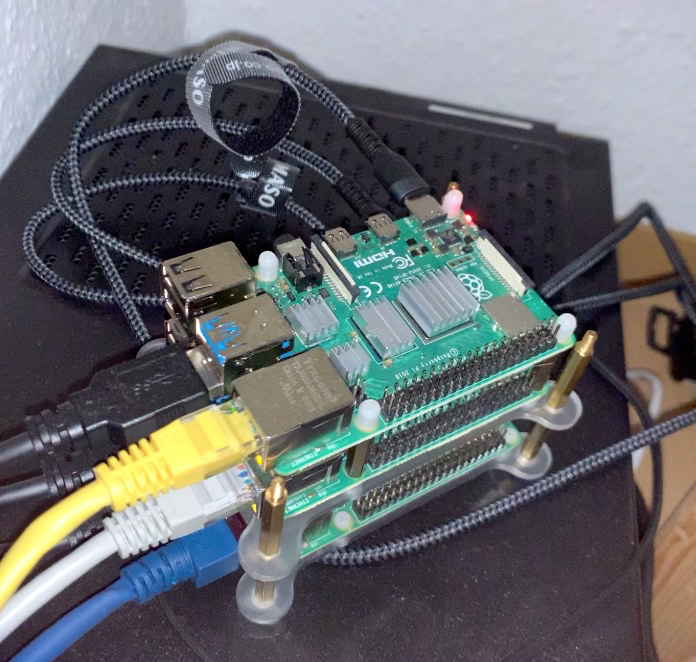

The hardware

- 1x Raspberry Pi 4 (8 GB)

- 2x Raspberry Pi 4 (4 GB)

- 3x SSDs as storage

- 3x USB-to-SATA adapters (StarTech.com USB312SAT3CB)

- UGREEN 65W USB-C power adapter

Install the operating system

I’m using Ubuntu Server on my nodes.

Download the arm64 Raspberry Pi image from here.

Write it to your SD cards, or, like me, directly to your SSDs, with the imaging tool of your choice.

Optional: Disable Wi-Fi and Bluetooth and free up memory

If you use the nodes only for Kubernetes, you can disable Bluetooth and reclaim most of the memory reserved for the GPU. If you only use Ethernet, you can also disable Wi-Fi.

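Open config.txt with:

sudo nano /boot/firmware/config.txt

and add the following lines:

gpu_mem=16

dtoverlay=disable-bt

dtoverlay=disable-wifi

On Ubuntu you probably also need to add cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory to the end of the existing line in /boot/firmware/cmdline.txt. The kernel reads this file as a single line, so append the flags with a space instead of putting them on a new line.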

Save both files and reboot the node.
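Editing both files by hand on several nodes is easy to get wrong, especially cmdline.txt, which has to stay a single line. Below is a minimal sketch of a shell function that applies the same changes and can safely be run more than once. The name apply_pi_tweaks is hypothetical, it assumes GNU sed (the default on Ubuntu), and it appends to the end of config.txt, so check which conditional section (such as [all] or [pi4]) the end of your file belongs to.

```shell
# Hypothetical helper: apply_pi_tweaks CONFIG_TXT CMDLINE_TXT
# Adds the settings from this post without duplicating them on re-runs.
apply_pi_tweaks() {
  config_txt=$1
  cmdline_txt=$2

  # If config.txt does not end with a newline, add one before appending lines.
  if [ -s "$config_txt" ] && [ -n "$(tail -c 1 "$config_txt")" ]; then
    echo >> "$config_txt"
  fi

  # Append each config.txt setting only if that exact line is not present yet.
  for line in gpu_mem=16 dtoverlay=disable-bt dtoverlay=disable-wifi; do
    grep -qxF -- "$line" "$config_txt" || printf '%s\n' "$line" >> "$config_txt"
  done

  # cmdline.txt must remain one line: add each missing flag to the end of line 1.
  for flag in cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory; do
    grep -qwF -- "$flag" "$cmdline_txt" || sed -i "1 s/\$/ $flag/" "$cmdline_txt"
  done
}
```

To use it, save the function in a file (for example a hypothetical pi-tweaks.sh) and run it as root on each node before rebooting: `sudo sh -c '. ./pi-tweaks.sh && apply_pi_tweaks /boot/firmware/config.txt /boot/firmware/cmdline.txt'`.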

Install K3s

Now it’s time to install k3s on the nodes. I’m using Alex Ellis’s excellent k3sup tool.

Install k3sup

curl -sLS https://get.k3sup.dev | sh

sudo install k3sup /usr/local/bin/

Then test it with:

k3sup --help

Access nodes with SSH

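Create an SSH key for easy and secure access to the nodes:

ssh-keygen -t ed25519 -C "yournameOrEmail"

ssh-keygen creates a key pair: a private key and a public key (by default ~/.ssh/id_ed25519 and ~/.ssh/id_ed25519.pub).

Copy and paste the public key into the ~/.ssh/authorized_keys file on every node, for example with:

nano ~/.ssh/authorized_keys

More information on generating a key can be found here.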
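Instead of pasting the key by hand, the standard ssh-copy-id tool can append it to authorized_keys for you. This is a small sketch that assumes the default key path from ssh-keygen, the ubuntu user, and that password login is still enabled on the nodes for this first connection; copy_key_to_nodes is a hypothetical helper name.

```shell
# Hypothetical helper: copy_key_to_nodes PUBLIC_KEY USER HOST...
# Installs PUBLIC_KEY into ~/.ssh/authorized_keys of USER on every HOST
# via ssh-copy-id (which asks for the password once per host).
copy_key_to_nodes() {
  pubkey=$1
  user=$2
  shift 2
  for host in "$@"; do
    # Stop at the first host that fails so the problem is easy to spot.
    ssh-copy-id -i "$pubkey" "$user@$host" || return 1
  done
}
```

For the example addresses used later in this post, the call would be `copy_key_to_nodes ~/.ssh/id_ed25519.pub ubuntu 192.168.2.1 192.168.2.2 192.168.2.3`.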

Optional: Add nodes to ssh config

For easy access, you can add your nodes to your SSH config file. Open or create it with:

nano ~/.ssh/config

and add the three nodes:

Host kube1

HostName 192.168.2.1

User ubuntu

IdentityFile ~/.ssh/<private-ssh-key-file>

Host kube2

HostName 192.168.2.2

User ubuntu

IdentityFile ~/.ssh/<private-ssh-key-file>

Host kube3

HostName 192.168.2.3

User ubuntu

IdentityFile ~/.ssh/<private-ssh-key-file>

Now you can access, for example, the kube1 node with:

ssh kube1

Preparations

Before installing, make sure you know:

- The IP address of the first node

- The IP address of the second node

- The IP address of the third node
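With these three addresses at hand, you can run the k3sup commands from the following sections one by one. If you expect to rebuild the cluster, a minimal sketch of collecting them in one shell function could look like this. It only repeats the flags used in this post; bootstrap_cluster is a hypothetical name, and it assumes all nodes use the ubuntu user and the same SSH key.

```shell
# Hypothetical helper: bootstrap_cluster MASTER1_IP MASTER2_IP WORKER_IP SSH_KEY
# Runs the same three k3sup commands as this post, stopping at the first failure.
bootstrap_cluster() {
  master1=$1
  master2=$2
  worker=$3
  key=$4
  # Packaged components that are replaced by our own deployments later in the series.
  extra='--disable servicelb,traefik,local-storage'

  # First master: --cluster starts k3s with embedded etcd so other servers can join.
  k3sup install --ip "$master1" --user ubuntu --cluster \
    --k3s-extra-args "$extra" --ssh-key "$key" || return 1

  # Second master: --server joins it as an additional server, not as an agent.
  k3sup join --ip "$master2" --user ubuntu --server \
    --k3s-extra-args "$extra" \
    --server-ip "$master1" --server-user ubuntu --ssh-key "$key" || return 1

  # Worker: without --server the node joins as an agent.
  k3sup join --ip "$worker" --user ubuntu \
    --server-ip "$master1" --server-user ubuntu --ssh-key "$key"
}
```

With the example addresses from the SSH config above, the call would be `bootstrap_cluster 192.168.2.1 192.168.2.2 192.168.2.3 ~/.ssh/id_ed25519`.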

Install first master node

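k3sup install --ip <ip-node-1> \
--user ubuntu \
--cluster \
--k3s-extra-args '--disable servicelb,traefik,local-storage' \
--ssh-key ~/.ssh/<private-ssh-key>

The --cluster flag starts k3s with an embedded etcd datastore, so that a second server node can join. The default load balancer (servicelb), Traefik and the local-storage provider are disabled because we replace them with our own deployments in later parts of this series.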

Install second master node

k3sup join --ip <ip-node-2> \
--user ubuntu \
--server --k3s-extra-args '--disable servicelb,traefik,local-storage' \
--server-ip <ip-node-1> --server-user ubuntu \
--ssh-key ~/.ssh/<private-ssh-key>

The --server flag makes this node join as an additional server (master) instead of a worker.

Install the worker node

k3sup join --ip <ip-node-3> \
--user ubuntu \
--server-ip <ip-node-1> --server-user ubuntu \
--ssh-key ~/.ssh/<private-ssh-key>

Without the --server flag, the node joins as an agent (worker).

Test cluster setup

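With kubectl installed, you can check whether your cluster is working correctly. By default, k3sup saves the kubeconfig as a file named kubeconfig in the directory where you ran k3sup install, so point kubectl at it first:

export KUBECONFIG=$(pwd)/kubeconfig

kubectl get nodes

You should see all your nodes with the status Ready: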

NAME    STATUS   ROLES                       AGE    VERSION
kube1   Ready    control-plane,etcd,master   118d   v1.22.5+k3s1
kube2   Ready    control-plane,etcd,master   118d   v1.22.5+k3s1
kube3   Ready    <none>                      118d   v1.22.5+k3s1

Summary

- We installed and configured Ubuntu Server on 3 Raspberry Pis

- We installed k3sup

- We set up SSH access to the nodes

- We installed k3s with k3sup

What’s next

In the next part, we install a load balancer that acts as the access point to the cluster.